One of the best ways to get started with CHAI3D is to explore the examples that ship with the framework. As you work through them, you will quickly discover that they all share a very similar structure and code base, with only minor variations from one example to the next.

In the following section, we walk through example 11-effects and comment on the most important parts of its code. Compiling and modifying the existing examples is the best way to become familiar with the concepts and features of the library.

Almost every application begins by creating a world into which objects, light sources, cameras, and tools can be inserted. The world acts as the root node of the entire scene graph. A uniform background color can also be assigned; by default, it is black. For more advanced backgrounds, explore the 2D widgets (see class cBackground).
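The scene-graph relationship described above can be sketched with a few hypothetical types. `Node`, `World`, and `Color` are illustrative stand-ins, not CHAI3D's classes: the world is a node with no parent that owns everything inserted into it and carries a background color that starts out black.

```cpp
#include <cstddef>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Hypothetical stand-ins for illustration only; these are not CHAI3D classes.
struct Color {
    float r = 0.0f, g = 0.0f, b = 0.0f;  // black unless changed
};

struct Node {
    std::string name;
    Node* parent = nullptr;
    std::vector<std::unique_ptr<Node>> children;

    explicit Node(std::string n) : name(std::move(n)) {}
    virtual ~Node() = default;

    // Takes ownership of the child and records this node as its parent.
    Node* addChild(std::unique_ptr<Node> child) {
        child->parent = this;
        children.push_back(std::move(child));
        return children.back().get();
    }

    // Counts this node plus all of its descendants.
    std::size_t countNodes() const {
        std::size_t n = 1;
        for (const auto& c : children) n += c->countNodes();
        return n;
    }
};

// The world is the root of the scene graph: it has no parent and owns
// everything inserted into it. It also stores the background color.
struct World : Node {
    Color backgroundColor;
    World() : Node("world") {}
};
```

Inserting a camera would then be `world.addChild(std::make_unique<Node>("camera"))`, after which the camera's `parent` points back to the world.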

To render a view of the world inside a display window, we need to define at least one camera that captures the world from a desired location. After creating the camera, we insert it into the world, where it becomes a child node of the world.
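A camera's "desired location" is commonly described by an eye position, a point to look at, and an up direction. Assuming that convention (the example may configure its camera differently), the hypothetical `lookAt` function below derives the camera's orthonormal frame from those three values; `Vec3` and `CameraFrame` are illustrative types, not CHAI3D's.

```cpp
#include <cmath>

// Minimal 3D vector type for this sketch (hypothetical).
struct Vec3 {
    double x, y, z;
};

Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
double dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 cross(Vec3 a, Vec3 b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}
Vec3 normalize(Vec3 v) {
    double len = std::sqrt(dot(v, v));
    return {v.x / len, v.y / len, v.z / len};
}

// The camera's local frame: three mutually orthogonal unit vectors.
struct CameraFrame {
    Vec3 forward, right, up;
};

// Camera placed at 'eye', looking at 'target', with 'upHint' roughly vertical.
CameraFrame lookAt(Vec3 eye, Vec3 target, Vec3 upHint) {
    Vec3 f = normalize(sub(target, eye));  // viewing direction
    Vec3 r = normalize(cross(f, upHint));  // camera's right-hand side
    Vec3 u = cross(r, f);                  // true up, orthogonal to f and r
    return {f, r, u};
}
```

For a camera at (3, 0, 0) looking at the origin with +z up, the frame is forward = (-1, 0, 0), right = (0, 1, 0), and up = (0, 0, 1).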

CHAI3D offers powerful capabilities for rendering scenes in stereo mode and on mirrored displays. Several stereo modes are available, depending on the type of 3D display you own. If you are using a 3D television, setting the display mode to C_STEREO_PASSIVE_TOP_BOTTOM is probably your best option. You may also need to adjust the settings of your television or monitor to activate the matching 3D mode; check its documentation to learn how to enable 3D settings.
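To make top/bottom stereo concrete, the sketch below computes where each eye's image goes in the window and where each eye sits relative to the camera. It is a hypothetical illustration of passive top/bottom frame packing, not CHAI3D's rendering code; the left-on-top layout and the symmetric eye offset are assumptions to verify against your display.

```cpp
#include <array>

// Hypothetical viewport: y is measured from the bottom edge, as in OpenGL.
struct Viewport {
    int x, y, width, height;
};

enum class Eye { Left, Right };

// Top/bottom packing: each eye gets half of the window height.
// Assumption: the left-eye image goes in the top half.
Viewport topBottomViewport(Eye eye, int windowWidth, int windowHeight) {
    int half = windowHeight / 2;
    if (eye == Eye::Left) {
        return {0, windowHeight - half, windowWidth, half};  // top half
    }
    return {0, 0, windowWidth, half};  // bottom half
}

// Each eye is offset from the camera by half the eye separation along the
// camera's right axis (expected to be a unit vector).
std::array<double, 3> eyePosition(Eye eye, const std::array<double, 3>& camPos,
                                  const std::array<double, 3>& rightAxis,
                                  double eyeSeparation) {
    double s = (eye == Eye::Left ? -0.5 : 0.5) * eyeSeparation;
    return {camPos[0] + s * rightAxis[0], camPos[1] + s * rightAxis[1],
            camPos[2] + s * rightAxis[2]};
}
```

For a 1920 x 1080 window, the left eye is drawn into the 1920 x 540 region starting at y = 540 and the right eye into the region starting at y = 0; the display then stretches each half back to full height.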

If your scene contains transparent objects, enabling multi-pass rendering can greatly improve the quality of the output image. In this mode, the scene is rendered in multiple passes: all opaque objects are drawn first, followed by the transparent ones.
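The ordering that multi-pass rendering enforces can be sketched in a few lines. Drawing opaque geometry first means that, when a transparent surface is blended, whatever lies behind it is already in the frame buffer. The `Object` type and `multipassOrder` function are hypothetical, and real transparency handling involves more than this two-pass split.

```cpp
#include <string>
#include <vector>

// Hypothetical scene object: only the properties this sketch needs.
struct Object {
    std::string name;
    double opacity;  // 1.0 = fully opaque
};

// Returns the order in which objects would be drawn: every opaque object in
// a first pass, then every transparent object in a second pass.
std::vector<std::string> multipassOrder(const std::vector<Object>& scene) {
    std::vector<std::string> order;
    for (const auto& o : scene)  // pass 1: opaque objects
        if (o.opacity >= 1.0) order.push_back(o.name);
    for (const auto& o : scene)  // pass 2: transparent objects
        if (o.opacity < 1.0) order.push_back(o.name);
    return order;
}
```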

For the camera to "see" the objects in the scene, at least one light source must be added to the world. In this example, we create a directional light source. The default light color is white, but it can be changed to any color. See the section about lighting for more information.
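Why a light is required becomes clear from the standard Lambertian diffuse term used by classic OpenGL-style lighting: the brightness of a surface depends on the angle between its normal and the direction toward the light. The hypothetical `DirectionalLight` below defaults to white, as in the text; its default direction of (-1, 0, 0) is an arbitrary choice for the sketch.

```cpp
#include <algorithm>

struct Vec3 {
    double x, y, z;
};

double dot(const Vec3& a, const Vec3& b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Hypothetical directional light: the direction the light travels, and a color.
struct DirectionalLight {
    Vec3 direction{-1.0, 0.0, 0.0};  // assumed to be unit length
    Vec3 color{1.0, 1.0, 1.0};       // white by default
};

// Lambertian diffuse term for a surface with unit normal n and base color
// 'albedo'. Surfaces facing away from the light receive nothing, which is why
// a scene without any light renders black.
Vec3 diffuse(const DirectionalLight& light, const Vec3& n, const Vec3& albedo) {
    Vec3 toLight{-light.direction.x, -light.direction.y, -light.direction.z};
    double k = std::max(0.0, dot(n, toLight));
    return {k * light.color.x * albedo.x, k * light.color.y * albedo.y,
            k * light.color.z * albedo.z};
}
```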

Next, we instantiate a haptic device handler, which gives us access to all haptic devices connected to the computer. In this example, we select the first available haptic device from the list.
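The handler pattern (enumerate the connected devices, then pick one by index) might look like this. `HapticDevice` and `DeviceHandler` are hypothetical, and the bool-plus-out-parameter shape of `getDevice` is an assumption of the sketch, not a statement about CHAI3D's signature.

```cpp
#include <cstddef>
#include <memory>
#include <string>
#include <vector>

// Hypothetical device and handler types, for illustration only.
struct HapticDevice {
    std::string model;
};

class DeviceHandler {
public:
    // In a real handler the list would come from scanning drivers/ports.
    explicit DeviceHandler(const std::vector<std::string>& detectedModels) {
        for (const auto& m : detectedModels)
            devices_.push_back(std::make_shared<HapticDevice>(HapticDevice{m}));
    }

    std::size_t deviceCount() const { return devices_.size(); }

    // Fills 'out' with the device at 'index'; returns false if none exists.
    bool getDevice(std::shared_ptr<HapticDevice>& out, std::size_t index) const {
        if (index >= devices_.size()) return false;
        out = devices_[index];
        return true;
    }

private:
    std::vector<std::shared_ptr<HapticDevice>> devices_;
};
```

Checking the return value matters: with no device connected, index 0 does not exist and the application should fall back or exit cleanly.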

In CHAI3D, a tool is a visual avatar that represents the haptic device in the world. The simplest tool is a 3D cursor, represented by a small sphere. After the tool is created, a haptic device is attached to it to control the position of the avatar. For a cursor, we also define its radius and the size of the workspace in the virtual world.
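The link between the device workspace and the virtual workspace can be modeled as a uniform scale factor. The sketch assumes the factor is the ratio of the desired virtual workspace radius to the device's physical workspace radius; `CursorTool` and `mapWorkspace` are hypothetical names.

```cpp
// Hypothetical 3D vector and cursor tool, for illustration only.
struct Vec3 {
    double x, y, z;
};

struct CursorTool {
    double radius = 0.03;               // radius of the sphere drawn as the avatar
    double workspaceScaleFactor = 1.0;  // world units per device meter

    // Assumption: scale = virtual workspace radius / physical device radius.
    void mapWorkspace(double virtualRadius, double deviceRadius) {
        workspaceScaleFactor = virtualRadius / deviceRadius;
    }

    // Device position (meters, device frame) -> cursor position in the world.
    Vec3 toWorld(const Vec3& devicePos) const {
        return {devicePos.x * workspaceScaleFactor, devicePos.y * workspaceScaleFactor,
                devicePos.z * workspaceScaleFactor};
    }
};
```

With a 0.1 m device workspace mapped to a virtual radius of 1.0, the scale factor is 10, so moving the handle 2 cm moves the cursor 0.2 units. Setting the factor to 1.0 instead gives a one-to-one mapping.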

If you prefer a one-to-one scale between the haptic device and the virtual workspace, set the tool's workspace scale factor to 1.0 instead.

For each object in the scene, you can assign physical haptic properties such as stiffness or friction. To ensure that your application operates stably on all haptic devices, you can scale these properties according to the specifications and limits of the haptic device in use:

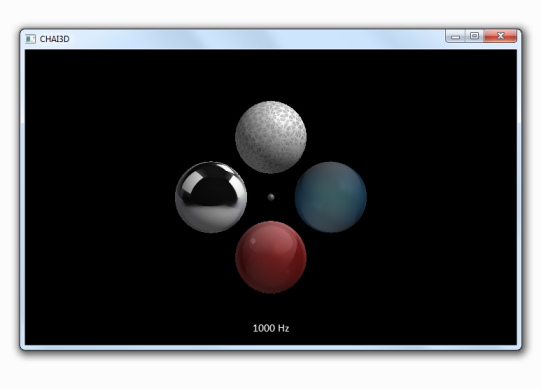
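The reasoning behind device-dependent scaling can be made explicit. If the tool maps device motion into the world with scale factor s, a penetration d in the world corresponds to a device displacement of d / s, so a world stiffness k is felt at the device as k * s. Keeping that below the device's maximum stiffness requires k <= k_max / s. The functions below encode this; their names and the example numbers are hypothetical, not the specifications of a real device.

```cpp
// Largest world stiffness the device can render, given its maximum linear
// stiffness (N/m) and the workspace scale factor (world units per device meter).
double maxWorldStiffness(double maxDeviceStiffness, double workspaceScaleFactor) {
    return maxDeviceStiffness / workspaceScaleFactor;
}

// Stiffness actually felt at the device for a given world stiffness.
double stiffnessAtDevice(double worldStiffness, double workspaceScaleFactor) {
    return worldStiffness * workspaceScaleFactor;
}
```

Assigning a fraction of this limit, for example half of it, to a material leaves a safety margin while still scaling correctly across devices with different capabilities.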

Next, we create a spherical shape primitive, insert it into the world, and set its position.
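Whether the cursor is touching the sphere comes down to a sphere-sphere overlap test: two spheres intersect when the distance between their centers is less than the sum of their radii. This hypothetical sketch is for intuition only; it is not the collision or force algorithm that CHAI3D uses, and the radius and position values in the test are illustrative.

```cpp
#include <cmath>

struct Vec3 {
    double x, y, z;
};

// Hypothetical sphere primitive: a radius and a position relative to its
// parent (the world here, so local and global positions coincide).
struct Sphere {
    double radius;
    Vec3 localPos;
};

// Penetration depth of a spherical cursor into the sphere (0 if not touching).
double penetration(const Sphere& s, const Vec3& cursorPos, double cursorRadius) {
    double dx = cursorPos.x - s.localPos.x;
    double dy = cursorPos.y - s.localPos.y;
    double dz = cursorPos.z - s.localPos.z;
    double dist = std::sqrt(dx * dx + dy * dy + dz * dz);
    double depth = (s.radius + cursorRadius) - dist;
    return depth > 0.0 ? depth : 0.0;
}
```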

Material and texture properties are then assigned to the object.

Haptic properties are also assigned through the material class.

Once the haptic values are defined in the material class, we still need to enable the effects that use these values. In this example, we enable three haptic effects that occur when the tool touches the sphere. If the effects are not enabled, the haptic values assigned to the material class are simply ignored.
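The separation between values stored in the material and effects that consume them can be sketched as follows. The types are hypothetical and forces are one-dimensional for brevity; two illustrative effects (a spring-like surface and a viscous damper) stand in for the three used in the example. Until an effect is created, the material values have no influence on the output force.

```cpp
#include <memory>
#include <vector>

// Hypothetical material holding haptic values; it computes nothing itself.
struct Material {
    double stiffness = 0.0;       // N/m, read by a surface effect
    double viscosity = 0.0;       // N*s/m, read by a viscosity effect
    double magnetMaxForce = 0.0;  // N, would be read by a magnetic effect
};

// An effect turns material values into a force.
struct Effect {
    virtual ~Effect() = default;
    virtual double force(const Material& m, double depth, double velocity) const = 0;
};

struct SurfaceEffect : Effect {
    double force(const Material& m, double depth, double) const override {
        return m.stiffness * depth;  // spring pushing the tool out
    }
};

struct ViscosityEffect : Effect {
    double force(const Material& m, double, double velocity) const override {
        return -m.viscosity * velocity;  // damping opposing motion
    }
};

struct Object {
    Material material;
    std::vector<std::unique_ptr<Effect>> effects;  // empty until effects are created

    // Sum over enabled effects; values with no matching effect are ignored.
    double totalForce(double depth, double velocity) const {
        double f = 0.0;
        for (const auto& e : effects) f += e->force(material, depth, velocity);
        return f;
    }
};
```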

To display 2D information in a scene, we use widgets. In this example, we first define a font and create a label that displays the rate at which the simulation is running. The label is placed on the front layer of the camera, so it is rendered as an overlay on top of the world.
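A rate display needs two pieces: a counter that is signaled once per loop iteration, and a string built from its measured frequency. `FrequencyCounter` and `rateLabel` are hypothetical; time is passed in explicitly (in seconds) so the logic is easy to test.

```cpp
#include <string>

// Hypothetical rate counter: call signal() once per loop iteration and
// update(now) periodically; the rate is recomputed over each full interval.
class FrequencyCounter {
public:
    explicit FrequencyCounter(double intervalSeconds = 1.0) : interval_(intervalSeconds) {}

    void signal(int count = 1) { counter_ += count; }

    // 'now' is the current time in seconds from a monotonic clock starting at 0.
    void update(double now) {
        double elapsed = now - start_;
        if (elapsed >= interval_) {
            frequency_ = counter_ / elapsed;
            counter_ = 0;
            start_ = now;
        }
    }

    double frequency() const { return frequency_; }

private:
    double interval_;
    double start_ = 0.0;
    long counter_ = 0;
    double frequency_ = 0.0;
};

// Text shown in the overlay label, rounded to whole hertz.
std::string rateLabel(const FrequencyCounter& c) {
    return "haptics: " + std::to_string(static_cast<long>(c.frequency() + 0.5)) + " Hz";
}
```

The haptics loop would call `signal()` each iteration, while the graphics loop calls `update()` and refreshes the label text once per frame.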

Now that the scene is built, we can start a haptics thread (> 1000 Hz) that runs independently of the much slower graphics thread (20-50 Hz).
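The two-loop structure can be sketched with standard C++ threads. The haptics loop spins as fast as it can on its own thread, while the main thread stands in for the graphics loop and waits about 20 ms per frame (roughly 50 Hz). `LoopStats` and `runSimulation` are hypothetical; device I/O and rendering are omitted.

```cpp
#include <atomic>
#include <chrono>
#include <thread>

// Hypothetical counters shared between the two loops.
struct LoopStats {
    std::atomic<long> hapticIterations{0};
    long graphicIterations = 0;  // only touched by the main thread
};

void runSimulation(LoopStats& stats, std::chrono::milliseconds duration) {
    std::atomic<bool> running{true};

    // Haptics loop: best effort, never waits for rendering.
    std::thread haptics([&] {
        while (running.load()) {
            // read device, compute forces, send forces (omitted)
            stats.hapticIterations.fetch_add(1);
        }
    });

    // Graphics loop on the main thread: one "frame" about every 20 ms.
    auto end = std::chrono::steady_clock::now() + duration;
    while (std::chrono::steady_clock::now() < end) {
        ++stats.graphicIterations;  // render one frame (omitted)
        std::this_thread::sleep_for(std::chrono::milliseconds(20));
    }

    running.store(false);  // ask the haptics loop to exit
    haptics.join();        // wait for it before the shared state goes away
}
```

Because the haptics thread never waits for a frame to be drawn, its iteration count ends up far higher than the graphics count. The atomic flag gives a clean shutdown, and `join()` guarantees the thread has exited before `runSimulation` returns.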

Finally, the haptics loop runs on a best-effort basis, iterating as fast as the system allows. At every iteration, the position of every object in the world is computed in global coordinates. The position of the tool is then updated by reading the sensors of the haptic device. Next, the interaction forces between the tool and the world are computed, and the resulting force is sent back to the haptic device to be rendered to the user.
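The four steps of one haptic iteration can be traced in a toy setting: a simulated device, a static sphere, and a point tool. For brevity the force is a simple penalty spring along the sphere's outward normal; this is a stand-in, not the force algorithm CHAI3D uses, and every name below is hypothetical.

```cpp
#include <cmath>

struct Vec3 {
    double x, y, z;
};

struct Device {  // simulated haptic device
    Vec3 position{0.0, 0.0, 0.0};   // what the sensors report (meters)
    Vec3 lastForce{0.0, 0.0, 0.0};  // what was last commanded (newtons)
};

struct Sphere {
    Vec3 center;
    double radius;
    double stiffness;
};

// Penalty force on a point tool: a spring along the outward normal,
// proportional to how far the tool has entered the sphere.
Vec3 contactForce(const Sphere& s, const Vec3& p) {
    Vec3 d{p.x - s.center.x, p.y - s.center.y, p.z - s.center.z};
    double dist = std::sqrt(d.x * d.x + d.y * d.y + d.z * d.z);
    double depth = s.radius - dist;
    if (depth <= 0.0 || dist == 0.0) return {0.0, 0.0, 0.0};
    double k = s.stiffness * depth / dist;  // scales d into a unit normal times force
    return {k * d.x, k * d.y, k * d.z};
}

// One iteration of the haptics loop.
void hapticStep(Device& device, const Sphere& sphere, double scale) {
    // 1. Global positions: nothing to update here, the sphere is static.
    // 2. Update the tool from the device sensors.
    Vec3 tool{device.position.x * scale, device.position.y * scale,
              device.position.z * scale};
    // 3. Compute the interaction force between the tool and the world.
    Vec3 f = contactForce(sphere, tool);
    // 4. Send the resulting force back to the device.
    device.lastForce = f;
}
```

With the device at 0.08 m and a scale factor of 10, the tool sits 0.2 units inside a unit sphere of stiffness 100, giving a force of about 20 along +x.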